In this guest article, James Sharpe, MMATH, Lead for Big Data & Security at Zenotech, explores the application of AI technology to the challenge of computational mesh generation and the implications for engineering sectors.

How can artificial intelligence (AI) and high-performance computing (HPC) solve mesh generation, one of the most commonly referenced problems in computational engineering? A new study has set out to answer this question and create an industry-first AI-mesh application to improve this previously time-consuming and iterative process. It has resulted in a mesh that is five to ten times more efficient than when generated manually.

AI is attracting all the buzz right now, but inevitably, with the hype, the field has become awash with applications that are not designed to solve existing problems. There is undoubtedly a great temptation to use AI to improve CFD methods, often by simply substituting AI-generated data for simulation data. However, such approaches tend either not to work effectively or to require orders of magnitude more training data than would be needed simply to run the simulation.

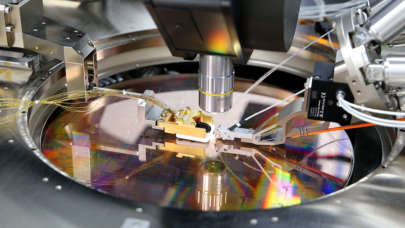

This investigation (led by Zenotech with AI specialists AlgoLab) began with a comprehensive assessment of the potential for deploying AI technology in digital engineering processes. After a series of down-selects, the automated generation of computational meshes for a specialist application domain emerged as the most promising candidate.

The project concentrated on creating a reusable framework for training AI algorithms with real datasets. The process generated has been applied to the task of creating meshes for wind energy resource assessment, which is typically a time-pressured activity, where turnaround and accuracy are business-critical.

The new meshing process makes use of a set of rules, derived from physical properties of the terrain (height, gradient, curvature, terrain type) and the locations of specific points of interest. In the case of wind energy, these include met masts, likely turbine locations or other existing data sources. Each rule is applied to every point on the terrain specification, with the AI algorithm responsible for determining the relative importance of each rule at each point and the appropriate set of parameter values that convert rules into mesh spacing. The resulting set of meshing instructions has O(1 million) variables for any given test case.
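As a rough illustration of how weighted rules could be turned into a mesh spacing at a single terrain point, consider the minimal sketch below. It is not the project's implementation: the `Rule` class, the `base_spacing` and `sensitivity` parameters, the spacing formula and the weighted-mean combination are all assumptions made for this example. In the process described above, the weights and parameters would be chosen by the AI algorithm rather than set by hand.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    """Hypothetical meshing rule driven by one terrain feature."""
    name: str
    base_spacing: float  # spacing (m) requested where the feature is zero
    sensitivity: float   # how strongly the feature refines the mesh

    def spacing(self, feature_value: float) -> float:
        # Assumed form: a larger feature magnitude requests a finer mesh.
        return self.base_spacing / (1.0 + self.sensitivity * abs(feature_value))


def combined_spacing(rules, features, weights):
    """Weighted mean of the spacings requested by each rule at one point."""
    total_weight = sum(weights[rule.name] for rule in rules)
    weighted_sum = sum(
        weights[rule.name] * rule.spacing(features[rule.name]) for rule in rules
    )
    return weighted_sum / total_weight


# Illustrative values only; none of these numbers come from the study.
rules = [
    Rule("gradient", base_spacing=100.0, sensitivity=1.0),
    Rule("curvature", base_spacing=100.0, sensitivity=3.0),
]
features = {"gradient": 1.0, "curvature": 1.0}  # terrain features at one point
weights = {"gradient": 1.0, "curvature": 1.0}   # relative importance of each rule

point_spacing = combined_spacing(rules, features, weights)
```

Applying a calculation of this kind at every terrain point, with per-point weights, is what makes the number of variables grow so quickly.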

As the training dataset relies on the repeated application of the real CFD-based wind analysis process (which is computationally expensive) and a comparison with a known highly accurate solution, the AI approach is designed to learn the parameter space from as few evaluated points as possible. A Gaussian process model was selected as the most natural choice for this problem.

The implementation of the AI process uses the GPflowOpt Python library (Knudde et al., 2017), built on TensorFlow, with a Matérn 5/2 (Matern52) covariance function. An initial 300 points were selected using Latin hypercube sampling (equivalent to placing N rooks on a chessboard so that none threatens another). New points to evaluate are then selected using the Hypervolume-based Probability of Improvement method (Couckuyt et al., 2014; Journal of Global Optimization, Vol. 60, pp. 575–594), which provides a fast calculation of the multi-objective probability of improvement and expected improvement criteria for Pareto optimization.
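The two building blocks named above can be sketched in plain Python. This is an illustrative sketch only and does not use or reproduce the GPflowOpt API: `latin_hypercube` and `matern52` are names chosen for this example, and the four-dimensional parameter space is an assumption. The first function draws samples so that each of the N equal-width strata in every dimension holds exactly one point (the "N rooks" property); the second evaluates the standard Matérn 5/2 covariance as a function of the distance between two points.

```python
import math
import random


def latin_hypercube(n_samples, n_dims, seed=0):
    """Draw n_samples points in [0, 1)^n_dims, one per stratum in each dimension."""
    rng = random.Random(seed)
    columns = []
    for _ in range(n_dims):
        # One random point inside each of the n_samples equal-width strata.
        column = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so that strata are paired at random across dimensions.
        rng.shuffle(column)
        columns.append(column)
    return [tuple(column[i] for column in columns) for i in range(n_samples)]


def matern52(distance, lengthscale=1.0, variance=1.0):
    """Matern 5/2 covariance between two points separated by `distance`."""
    s = math.sqrt(5.0) * abs(distance) / lengthscale
    return variance * (1.0 + s + s * s / 3.0) * math.exp(-s)


# 300 initial points, as in the article; 4 dimensions is an assumed example size.
initial_points = latin_hypercube(n_samples=300, n_dims=4)
```

In practice each coordinate would be rescaled from the unit interval to the range of the corresponding meshing parameter before the CFD process is run at that point.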

The Hypervolume method constructs a Pareto front to select the next points to evaluate. Two objective functions are defined to be minimized: (i) the cell count in the generated mesh (a good measure of the expense of the CFD simulation), and (ii) the RMS error of the generated flow solution at experimental data points. The resulting Pareto front and the associated parameter settings at each point form a lookup table that provides the optimal meshing rules for a given level of accuracy.
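The lookup-table idea can be illustrated with a short sketch. This shows only the final filtering and lookup step, not the hypervolume-based acquisition itself, and the candidate values and parameter labels are invented for the example. Each candidate is a tuple of (cell count, RMS error, parameter settings); a candidate is kept on the front only if no other candidate has both a lower or equal cell count and a lower or equal error.

```python
def pareto_front(candidates):
    """Return the non-dominated candidates, sorted by increasing cell count."""
    front = []
    best_error = float("inf")
    # Sort by cell count, then error; a point survives only if it improves
    # on the error of every cheaper mesh seen so far.
    for candidate in sorted(candidates, key=lambda c: (c[0], c[1])):
        if candidate[1] < best_error:
            front.append(candidate)
            best_error = candidate[1]
    return front


def cheapest_for_accuracy(front, max_error):
    """Look up the smallest mesh on the front whose RMS error meets the target."""
    for candidate in front:
        if candidate[1] <= max_error:
            return candidate
    return None  # no evaluated mesh reaches the requested accuracy


# Hypothetical evaluations: (cell count, RMS error, parameter settings).
candidates = [
    (2_000_000, 0.9, {"id": "a"}),
    (5_000_000, 0.5, {"id": "b"}),
    (6_000_000, 0.7, {"id": "c"}),   # dominated by "b"
    (12_000_000, 0.3, {"id": "d"}),
    (15_000_000, 0.3, {"id": "e"}),  # dominated by "d"
]

front = pareto_front(candidates)
choice = cheapest_for_accuracy(front, max_error=0.6)
```

Because the front is sorted by cell count, the first entry that meets the error target is also the cheapest mesh that does so.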

The process can now be run automatically, and typically generates a mesh that is five to ten times more efficient than one created manually. A vast number of training datasets have been produced in the process. Another benefit is that the process parameters are generic and can therefore be applied to new test cases without the need for additional training.

The use of commodity computing and advanced hardware via EPIC, an online portal to high-performance computing, was a vital component. This delivers the scalability and processing power needed to train, affordably, on datasets generated from numerical experiments.

The results have led to process improvements that have already been deployed on two commercial projects, supporting growth and export in the wind energy sector. These types of projects have typically relied on lower-fidelity computational engineering methods to deliver timely results, and the new process makes it possible to deploy high-fidelity methods in the same timescale.

The capability developed will now be further extended to include more data feedback to the automation process, and these methods can now be transferred to other engineering sectors.

About the Author

James Sharpe is a specialist in computer science and software engineering at Zenotech Ltd. While at BAE Systems Advanced Technology Centre, James developed new mathematical algorithms for the latest in high performance computing hardware and led development teams in Applied Intelligence – working at the forefront of cybersecurity. James is the Zenotech lead for Big Data and Security.