On October 1 of this year, IonQ became the first pure-play quantum computing start-up to go public. At this writing, the stock (NYSE: IONQ) was around $15 and its market capitalization was roughly $2.89 billion. Co-founder and chief scientist Chris Monroe says it was fun to have a few of the company’s roughly 100 employees travel to New York to ring the opening bell of the New York Stock Exchange. It will also be interesting to listen to IonQ’s first scheduled financial results call (Q3) on November 15.

IonQ is in the big leagues now. Wall Street can be brutal as well as rewarding, although these are certainly early days for IonQ as a public company. Founded in 2015 by Monroe and Duke researcher Jungsang Kim — who is the company CTO — IonQ now finds itself under a new magnifying glass.

How soon quantum computing will become a practical tool is a matter of debate, although there’s growing consensus that it will, in fact, become such a tool. There are several competing flavors (qubit modalities) of quantum computing being pursued. IonQ has bet that trapped ion technology will be the big winner. So confident is Monroe that he suggests other players with big bets on other approaches – think superconducting, for example – are waking up to the advantages of ion traps and are likely to jump into ion trap technology as direct competitors.

In a wide-ranging discussion with HPCwire, Monroe talked about ion technology and IonQ’s (roughly) three-step plan to scale up quickly; roadblocks facing other approaches (superconducting and photonic); how an IonQ system with about 1,200 physical qubits and home-grown error-correction will be able to tackle some applications; and why IonQ is becoming a software company and that’s a good thing.

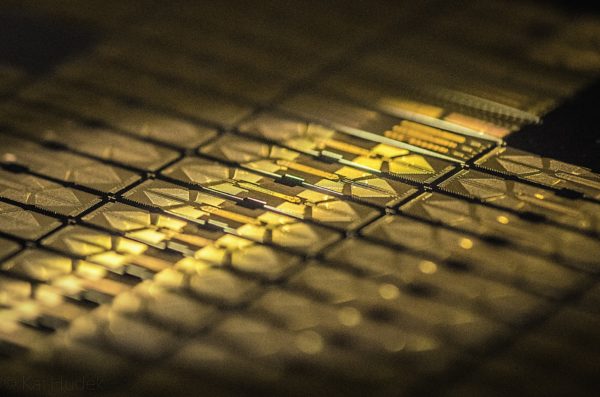

In ion trap quantum computing, ions are held in position by electromagnetic forces where they can be manipulated by laser beams. IonQ uses ytterbium (Yb) atoms. Once the atoms are turned into ions by stripping off one valence electron, IonQ uses a specialized chip called a linear ion trap to hold the ions precisely in 3D space. Literally, they sort of float above the surface. “This small trap features around 100 tiny electrodes precisely designed, lithographed, and controlled to produce electromagnetic forces that hold our ions in place, isolated from the environment to minimize environmental noise and decoherence,” as described by IonQ.

It turns out ions have naturally longer coherence times and therefore require somewhat less error correction and are suitable for longer operations. This is the starting point for IonQ’s advantage. Another plus is that system requirements themselves are less complicated and less intrusive (noise producing) than systems for semiconductor-based, superconducting qubits – think of the need to cram control cables into a dilution refrigerator to control superconducting qubits. That said, all of the quantum computing paradigms are plenty complicated.

For the moment, ion traps using lasers to interact with the qubits is one of the most straightforward approaches. It has its own scaling challenge but Monroe contends modular scaling will solve that problem and leverage ion traps’ other strengths.

“Repeatability [in manufacturing superconducting qubits] is wonderful but we don’t need atomic scale deposition, like you hear of with five nanometer feature sizes on the latest silicon chips,” said Monroe. “The atoms themselves are far away from the chips, they’re 100 microns, i.e. a 10th of a millimeter away, which is miles atomically speaking, so they don’t really see all the little imperfections in the chip. I don’t want to say it doesn’t matter. We put a lot of care into the design and the fab of these chips. The glass trap has certain features; [for example] it’s actually a wonderful material for holding off high voltage compared to silicon.”

IonQ started with silicon-based traps and is now moving to evaporated glass traps.

“What is interesting is that we’ve built the trap to have several zones. This is one of our strategies for scale. Right now, at IonQ, we have exactly one chain of atoms, these are the qubits, and we typically have a template of about 32 qubits. That’s as many as we control. You might ask, how come you’re not doing 3200 qubits? The reason is, if you have that many qubits, you better be able to perform lots and lots of operations and you need very high quality operations to get there. Right now, the quality of our operation is approaching 99.9%. That is a part per 1000 error,” said Monroe.

“This is sort of back of the envelope calculations but that would mean that you can do about 1000 ops. There’s an intuition here [that] if you have n qubits, you really want to do about n² ops. The reason is, you want these pairwise operations, and you want to entangle all possible pairs. So if you have 30 qubits, you should be able to get to about 1000 ops. That’s sort of where we are now. The reason we don’t have 3200 yet is that if you have 3200 qubits, you should be able to do 10 million ops and that means your noise should be one part in 10⁷. We’re not there yet. We have a strategy to get there,” said Monroe.
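Monroe's back-of-the-envelope reasoning can be written out as a short calculation. The sketch below is only an illustration of the rule of thumb in the quote (about n² operations for n qubits, and a per-operation error small enough that roughly one error is expected across all of them). The function names are made up for this example and it is not a model of IonQ's actual error budget.

```python
def ops_budget(n_qubits: int) -> int:
    """Rule of thumb from the quote: about n^2 operations for n qubits."""
    return n_qubits ** 2


def max_error_per_op(n_qubits: int) -> float:
    """Per-operation error that leaves about one expected error over n^2 ops."""
    return 1.0 / ops_budget(n_qubits)


# 30 and 3200 are the qubit counts Monroe mentions; 32 is IonQ's typical chain.
for n in (30, 32, 3200):
    print(f"{n:>5} qubits -> ~{ops_budget(n):,} ops, "
          f"error per op <= ~{max_error_per_op(n):.1e}")
```

For 30 to 32 qubits the required error comes out near one part in 1,000, which matches the 99.9% figure quoted above; for 3200 qubits it is near one part in 10 million.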

While you could put more ions in a trap, controlling them becomes more difficult. Long chains of ions become soft and squishy. “A smaller chain is really stiff [and] much less noisy. So 32 is a good number. 16 might be a good number. 64 is a good number, but it’s going to be somewhere probably under 100 ions,” said Monroe.

The first part of the strategy for scaling is to have multiple chains on a chip that are separated by a millimeter or so which prevents crosstalk and permits local operations. “It’s sort of like a multi-core classical architecture, like the multi-core Pentium or something like that. This may sound exotic, but we actually physically move the atoms, we bring them together, the multiple chains to connect them. There’s no real wires. This is sort of the first [step] in rolling out a modular scale-up,” said Monroe.

In proof-of-concept work, IonQ announced the ability to arbitrarily move four chains of 16 atoms around in a trap, bringing them together and separating them without “losing” any of the atoms. “It wasn’t a surprise we were able to do that,” said Monroe. “But it does take some design in laying out the electrodes. It’s exactly like surfing, you know, the atoms are actually surfing on an electric field wave, and you have to design and implement that wave. That was the main result there. In 2022, we’re going to use that architecture in one of our new systems to actually do quantum computations.”

There are two more critical steps in IonQ’s plan for scaling. Error correction is one. Clustering the chips together into larger systems is the other. Monroe tackled the latter first.

“Think about modern datacenters, where you have a bunch of computers that are hooked together by optical fibers. That’s truly modular, because we can kind of plug and play with optical fibers,” said Monroe. He envisions something similar for trapped ion quantum computers. Frankly, everyone in the quantum computing community is looking at clustering approaches and how to use them effectively to scale smaller systems into larger ones.

“This interface between individual atom qubits and photonic qubits has been done. In fact, my lab at University of Maryland did this for the first time in 2007. That was 14 years ago. We know how to do this, how to move memory quantum bits of an atom onto a propagating photon and actually, you do it twice. If you have a chip over here and a chip over here, you bring two fibers together, and they interfere and you detect the photons. That basically makes these two photons entangled. We know how to do that.”

“Once we get to that level, then we’re sort of in manufacturing mode,” said Monroe. “We can stamp out chips. We imagine having rack-mounted chips, probably multicore. Maybe we’ll have several hundred atoms on that chip, and a few of the atoms on the chip will be connected to optical conduits, and that allows us to connect to the next rack-mounted system,” he said.

The key enabler, said Monroe, is a nonblocking optical switch. “Think of it as an old telephone operator. They have, let’s say they have 100 input ports and 100 output ports. And the operator connects any input to any output. Now, there are a lot of connections, a lot of possibilities there. But these things exist, these automatic operators using mirrors, and so forth. They’re called n-by-n, nonblocking optical switches and you can reconfigure them,” he said.

“What’s cool about that is you can imagine having several hundred, rack-mounted, multi-core quantum computers, and you feed them into this optical switch, and you can then connect any multi-core chip to any other multi-core chip. The software can tell you exactly how you want to network. That’s very powerful as an architecture because we have a so-called full connection there. We won’t have to move information to nearest neighbor and shuttle it around to swap; we can just do it directly, no matter where you are,” said Monroe.
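The telephone-operator analogy can be made concrete with a toy model. The following is a minimal sketch, assuming a switch is nothing more than a reconfigurable table of input-to-output routes. The class and method names are hypothetical, and it says nothing about how a real optical switch or IonQ's control software works. "Nonblocking" here means that any idle input can be routed to any idle output, whatever other routes are already set up.

```python
class NonblockingSwitch:
    """Toy n-by-n switch: any idle input can be routed to any idle output."""

    def __init__(self, n_ports):
        self.n_ports = n_ports
        self.routes = {}  # input port -> output port

    def connect(self, inp, out):
        for port in (inp, out):
            if not 0 <= port < self.n_ports:
                raise ValueError(f"port {port} is out of range")
        if inp in self.routes:
            raise ValueError(f"input {inp} is already in use")
        if out in self.routes.values():
            raise ValueError(f"output {out} is already in use")
        self.routes[inp] = out

    def disconnect(self, inp):
        self.routes.pop(inp, None)


switch = NonblockingSwitch(100)  # 100 inputs and 100 outputs, as in the quote
switch.connect(3, 97)    # link the chip on input 3 to the chip on output 97
switch.connect(42, 0)    # an unrelated pair can be linked at the same time
switch.disconnect(3)
switch.connect(3, 5)     # reconfigure: input 3 now reaches a different chip
print(switch.routes)
```

The only conflicts the model rejects are attempts to reuse a port that is already busy. No existing route ever prevents a new route between two idle ports, which is the property that gives the "full connection" Monroe describes.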

The third leg is error correction, which without question is a daunting challenge throughout quantum computing. The relative unreliability of qubits means you need many redundant physical qubits – estimates vary widely on how many – to have a single reliable logical qubit. Ions are among the better-behaved qubits. For starters, all the ions are literally identical and not subject to manufacturing defects. A slight downside is that ion qubit switching speed is slower than that of other modalities, which some observers say may hamper efficient error correction.

Said Monroe, “The nice thing about trapped ion qubits is their errors are already pretty good natively. Passively, without any fancy stuff, we can get to three or four nines[i] before we run into problems.”

“What are those problems? I don’t want to say they’re fundamental, but there are brick walls that require a totally different architecture to get around,” said Monroe. “But we don’t need to get better than three or four nines because of error correction. This is sort of a software encoding. The price you pay for error correction, just like in classical error correction encoding, is you need a lot more bits to redundantly encode. The same is true in quantum. Unfortunately, with quantum there are many more ways you can have an error.”

Just how many physical qubits are needed for a logical qubit is something of an open question.

“It depends what you mean by logical qubit. There’s a difference in philosophy in the way we’re going forward compared to many other platforms. Some people have this idea of fault tolerant quantum computing, which means that you can compute infinitely long if you want. It’s a beautiful theoretical result. If you encode in a certain way, with enough overhead, you can actually run gates as long as you want. But to get to that level, the overhead is something like 100,000 to one, [and] in some cases a million to one, but that logical qubit is perfect, and you get to go as far as you want [in terms of number of gate operations],” he said.

IonQ is taking a different tack that leverages software more than hardware, thanks to ions’ stability and a less noisy overall support system [ion trap]. Monroe likens improving qubit quality to “buying a nine” in the commonly used ‘five nines’ vernacular of reliability. Five nines (99.999 percent) is used to describe availability or, put another way, time between shutdowns because of error.

“We’re going to gradually leak in error correction only as needed. So we’re going to buy a nine with an overhead of about 16 physical qubits to one logical qubit. With another overhead of 32 to one, we can buy another nine. By then we will have five nines and several hundred logical qubits. This is where things are going to get interesting, because then we can do algorithms that you can’t simulate classically, [such] as some of these financial models we’re doing now. This is optimizing some function, but it’s doing better than the classical result. That’s where we think we will be at that point,” he said.
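The "buying a nine" idea is easy to tabulate. The sketch below assumes a starting point of three nines (the 99.9% operation quality quoted earlier in the article) and treats each nine as a tenfold drop in per-operation error. The helper names are invented for this example, and the overhead column simply repeats the 16-to-one and 32-to-one figures from the quote, which does not say whether the second figure is a total or is paid on top of the first.

```python
def fidelity_label(nines: int) -> str:
    """Write a fidelity with the given number of nines, e.g. 3 -> '99.9%'."""
    return "99." + "9" * (nines - 2) + "%"


def ops_before_one_error(nines: int) -> int:
    """About how many operations fit before one error is expected."""
    return 10 ** nines


# (stage, nines, overhead as quoted); the stage labels are illustrative only.
stages = [
    ("no error correction", 3, "1 to 1"),
    ("first nine bought", 4, "about 16 to 1"),
    ("second nine bought", 5, "32 to 1"),
]

for stage, nines, overhead in stages:
    print(f"{stage:<20} {fidelity_label(nines):<8} "
          f"~{ops_before_one_error(nines):>7,} ops   overhead {overhead}")
```

On this reading, five nines corresponds to roughly 100,000 operations before an error is expected.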

Monroe didn’t go into detail about exactly how IonQ does this, but he emphasized that software is the big driver now at IonQ. “Our whole approach at IonQ is to throw everything up to software as much as we can. That’s because we have these perfectly replicable atomic qubits, and we don’t have manufacturing errors, we don’t have to worry about a yield or anything like that. Everything is a control problem.”

So how big a system do you need to run practical applications?

“That’s a really good question, because I can safely say we don’t exactly know the answer to that. What we do know [is] if you get to about 100 qubits, maybe 72, or something like that, and these qubits are good enough, meaning that you can do tens of thousands of ops. Remember, with 100 qubits you want to do about 10,000 ops to [do] something you can’t simulate classically. This is where you might deploy some machine learning techniques that you would never be able to do classically. That’s probably where the lowest hanging fruit are,” said Monroe.

“Now for us to get to 100 [good] qubits and say 50,000 ops, that requires about 1000 physical qubits, maybe 1500 physical qubits. We’re looking at 1200 physical qubits, and this might be 16 cores with 64 ions in each core before we have to go to photonic connections. But the photonic connection is the key because [it’s] where you start to have a truly modular data center. You can stamp these things out. At that point, we’re just going to be making these things like crazy, and wiring them together. I think we’ll be able to do interesting things before we get to that stage and it will be important if we can show some kind of value (application results/progress) and that we have the recipe for scaling indefinitely. That’s a big deal,” he said.
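The numbers in this quote can be checked with simple arithmetic. The sketch below uses only the figures Monroe cites (16 cores, 64 ions per core, about 100 good qubits, and 1000 to 1500 physical qubits). The variable names are arbitrary, and the resulting ratios are a reading of the quote rather than a published IonQ specification.

```python
CORES = 16            # cores per chip, from the quote
IONS_PER_CORE = 64    # ions per core, from the quote
LOGICAL_TARGET = 100  # "100 [good] qubits"

physical_on_chip = CORES * IONS_PER_CORE  # 16 * 64 = 1024

# Compare the quoted physical-qubit estimates with the 16 x 64 layout.
for physical in (1000, physical_on_chip, 1200, 1500):
    ratio = physical / LOGICAL_TARGET
    print(f"{physical:>5} physical qubits / {LOGICAL_TARGET} logical "
          f"= {ratio:.1f} physical per logical")
```

The 16-core layout gives 1024 ions, inside the quoted 1000 to 1500 range. The implied overhead of 10 to 15 physical qubits per good qubit is in the same neighborhood as the roughly 16-to-one figure Monroe gives for buying the first nine.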

It is probably going too far to say that Monroe believes scaling up IonQ’s quantum computer is now ‘just’ a straightforward engineering task, but it sometimes sounds that way. The biggest technical challenges, he suggests, are largely solved. Presumably, IonQ will successfully demonstrate its modular architecture in 2022. He said competing approaches – superconducting and all-photonics, for example – won’t be able to scale. “They are stuck,” he said.

“I think they will see atomic systems as being less exotic than they once thought. I mean, we think of computers as built from silicon and as solid state. For better or worse, you have companies that forgot that they’re supposed to build computers, not silicon or superconductors. I think we’re going to see a lot more fierce competition on our own turf,” said Monroe. There are ion trap rivals. Honeywell is one such rival (Honeywell has announced plans to merge with Cambridge Quantum).

His view of the long-term is interesting. As science and hardware issues are solved, software will become the driver. IonQ already has a substantial software team. The company uses machine learning now to program its control system elements such as the laser pulses and connectivity. “We’re going to be a software company in the long haul, and I’m pretty happy with that,” said Monroe.

IonQ has already integrated with the three big cloud providers’ (AWS, Google, Microsoft) quantum offerings and embraced the growing ecosystem of software and tools providers and has APIs for use with a variety of tools. Monroe, like many in the quantum community, is optimistic but not especially precise about when practical applications will appear. Sometime in the next three years is a good guess, he suggests. As for which application area will be first, it may not matter in the sense that he thinks as soon as one domain shows benefit (e.g. finance or ML) other domains will rush in.

These are heady times at IonQ, as they are throughout quantum computing. Stay tuned.

[i] He likens improving qubit quality to “buying a nine” in the commonly used ‘five nines’ vernacular of reliability. Five nines (99.999 percent) is used to describe availability or, put another way, time between shutdowns because of error.